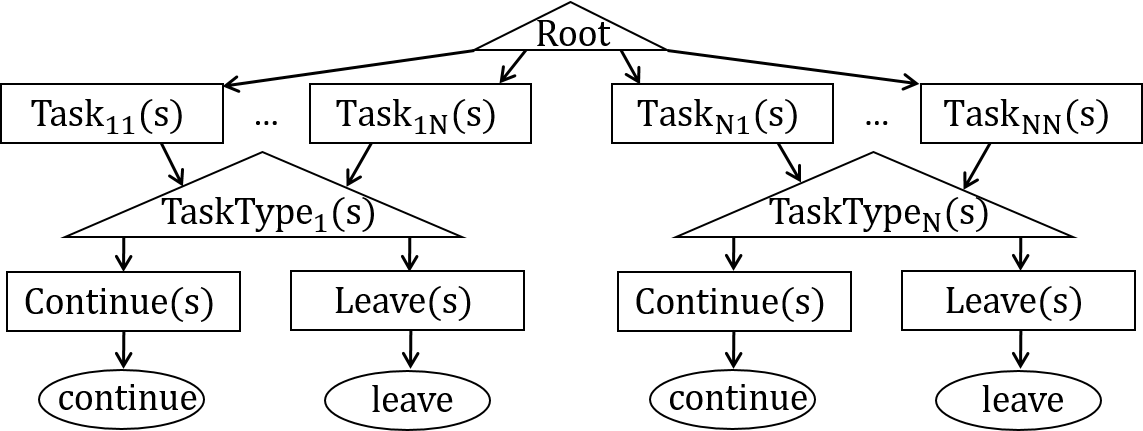

A hierarchical decomposition of the task-interleaving problem: subroutines are triangles, rectangles are composite actions and primitive actions are ovals. Root chooses among all available task instances, e.g., Task11(s), which in turn call the subroutine of their respective type, e.g., TaskType1(s). A subroutine can either continue Continue(s) or leave Leave(s) a task.

Abstract

How do people decide how long to continue in a task, when to switch, and to which other task? It is known that task interleaving adapts situationally, showing sensitivity to changes in expected rewards, costs, and task boundaries. However, the mechanisms that underpin the decision to stay in a task vs. switch away are not thoroughly understood. Previous work has explained task interleaving by greedy heuristics and a policy that maximizes the marginal rate of return. However, it is unclear how such a strategy would allow for adaptation to environments that offer multiple tasks with complex switch costs and delayed rewards. Here, we develop a hierarchical model of supervisory control driven by reinforcement learning (RL). The core assumption is that the supervisory level learns to switch using task-specific approximate utility estimates, which are computed on the lower level. We show that a hierarchically optimal value function decomposition can be learned from experience, even in conditions with multiple tasks and arbitrary and uncertain reward and cost structures. The model also reproduces well-known key phenomena of task interleaving, such as the sensitivity to costs of resumption and immediate as well as delayed in-task rewards. In a demanding task interleaving study with 211 human participants and realistic tasks (reading, mathematics, question-answering, recognition), the model yielded better predictions of individual-level data than a flat (non-hierarchical) RL model and an omniscient-myopic baseline. Corroborating emerging evidence from cognitive neuroscience, our results suggest hierarchical RL as a plausible model of supervisory control in task interleaving.

Additional Results

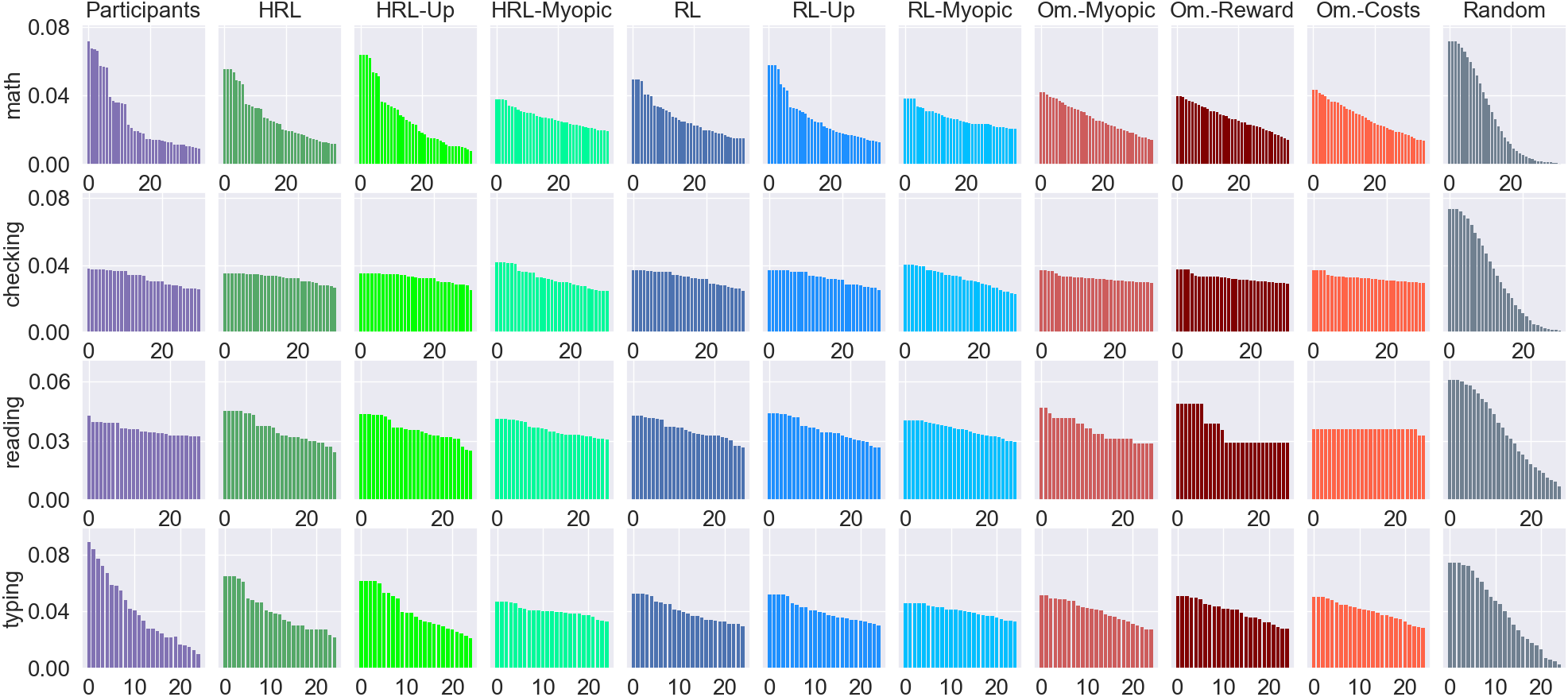

State visitations: HRL shows better match with state visitation patterns than Myopic and Random. Y-axis shows fraction of states visited aggregated over all trials.

License

This Dataset is released under the CC BY-NC-SA 4.0 license with additional conditions and terms.

Please refer to the complete license file here.

Downloads

- The Task Interleaving Dataset is available on request. Please fill in this Google Form to gain access to the dataset.