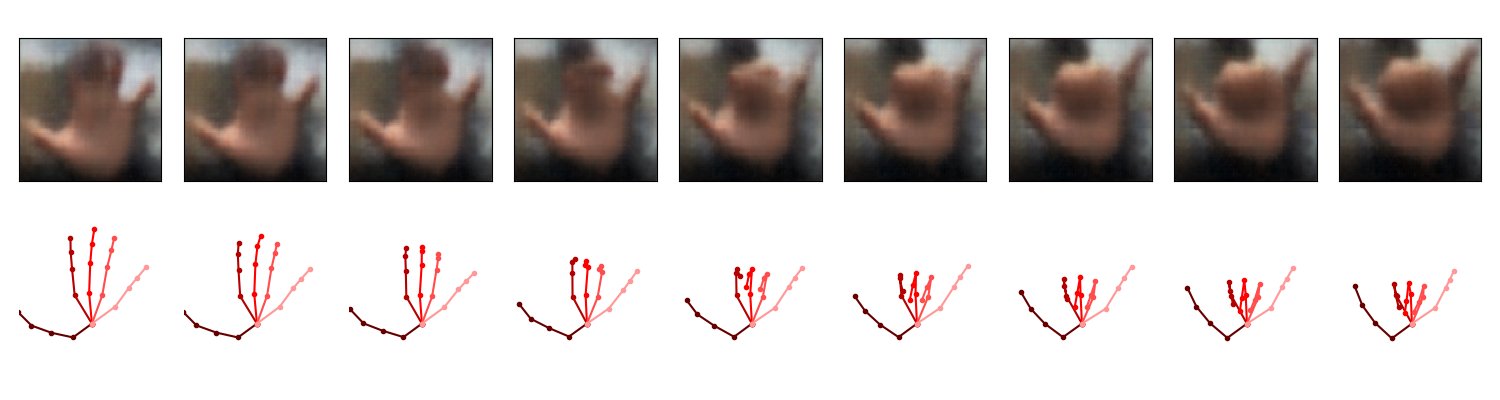

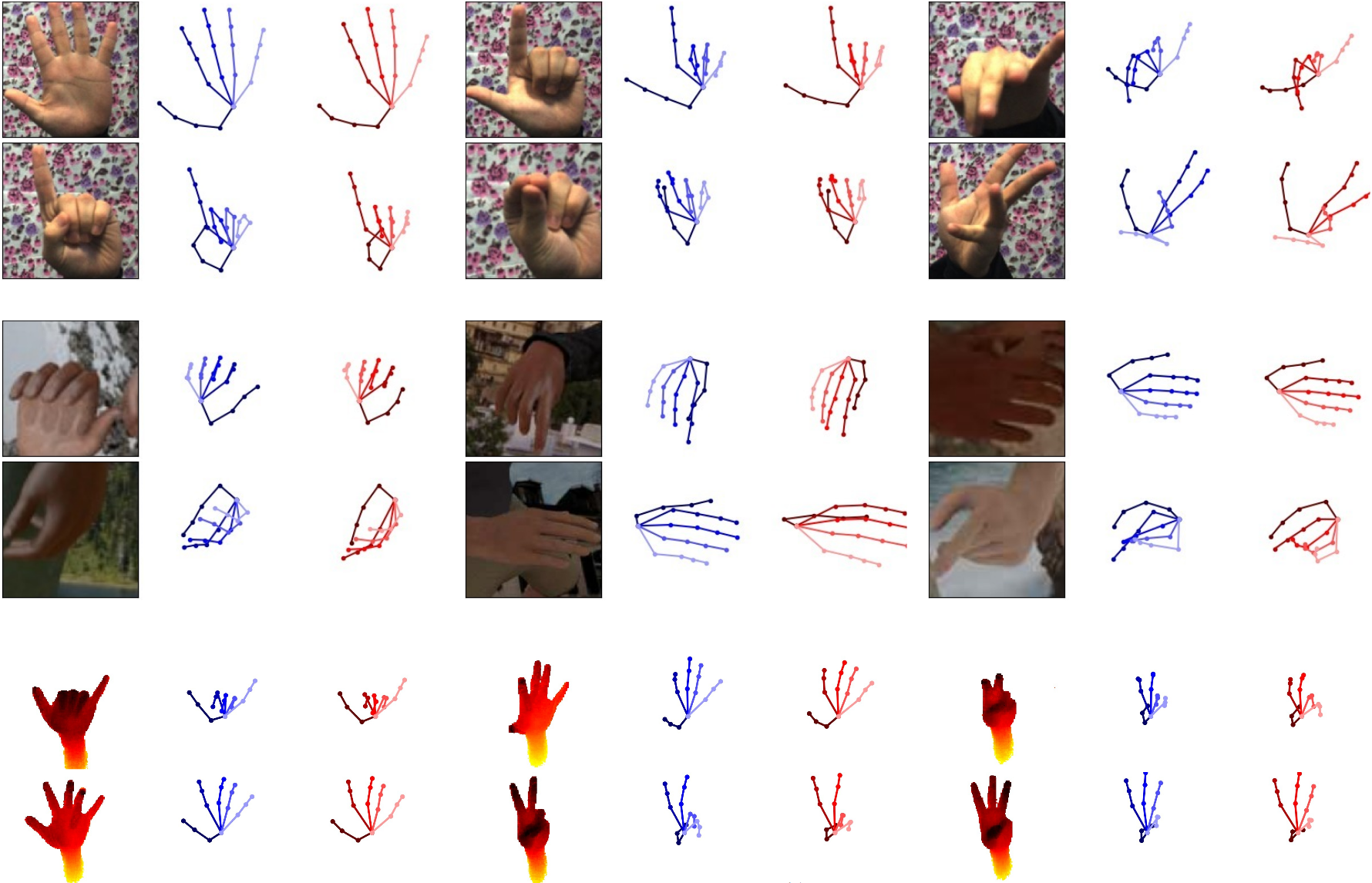

We develop a new a novel approach to estimate the full 3D joint position of the hand from soley monocular RGB images. Our approach learns a manifold of valid hand poses with the help of a Variational Autoencoder inwhich an RGB image can be projected into to retrieve the displayed hand configuration, reaching state-of-the-art performance on the tested data sets. (Top row) Latent space walk: Due to the learned manifold, we are capable of generating new labeled data pairs. (2nd/3rd row) Sample RGB predictions: Predictions of our model on real and synthetic data. (Bottom row) Sample depth predictions: Our approach performs competitive to related work on depth data with minimal modification of the architecture.

Abstract

The human hand moves in complex and high-dimensional ways, making estimation of 3D hand pose configurations from images alone a challenging task. In this work we propose a method to learn a statistical hand model represented by a cross-modal trained latent space via a generative deep neural network. We derive an objective function from the variational lower bound of the VAE framework and jointly optimize the resulting cross-modal KL-divergence and the posterior reconstruction objective, naturally admitting a training regime that leads to a coherent latent space across multiple modalities such as RGB images, 2D keypoint detections or 3D hand configurations. Additionally, it grants a straightforward way of using semi-supervision. This latent space can be directly used to estimate 3D hand poses from RGB images, outperforming the state-of-the art in different settings. Furthermore, we show that our proposed method can be used without changes on depth images and performs comparably to specialized methods. Finally, the model is fully generative and can synthesize consistent pairs of hand configurations across modalities. We evaluate our method on both RGB and depth datasets and analyze the latent space qualitatively.