Abstract

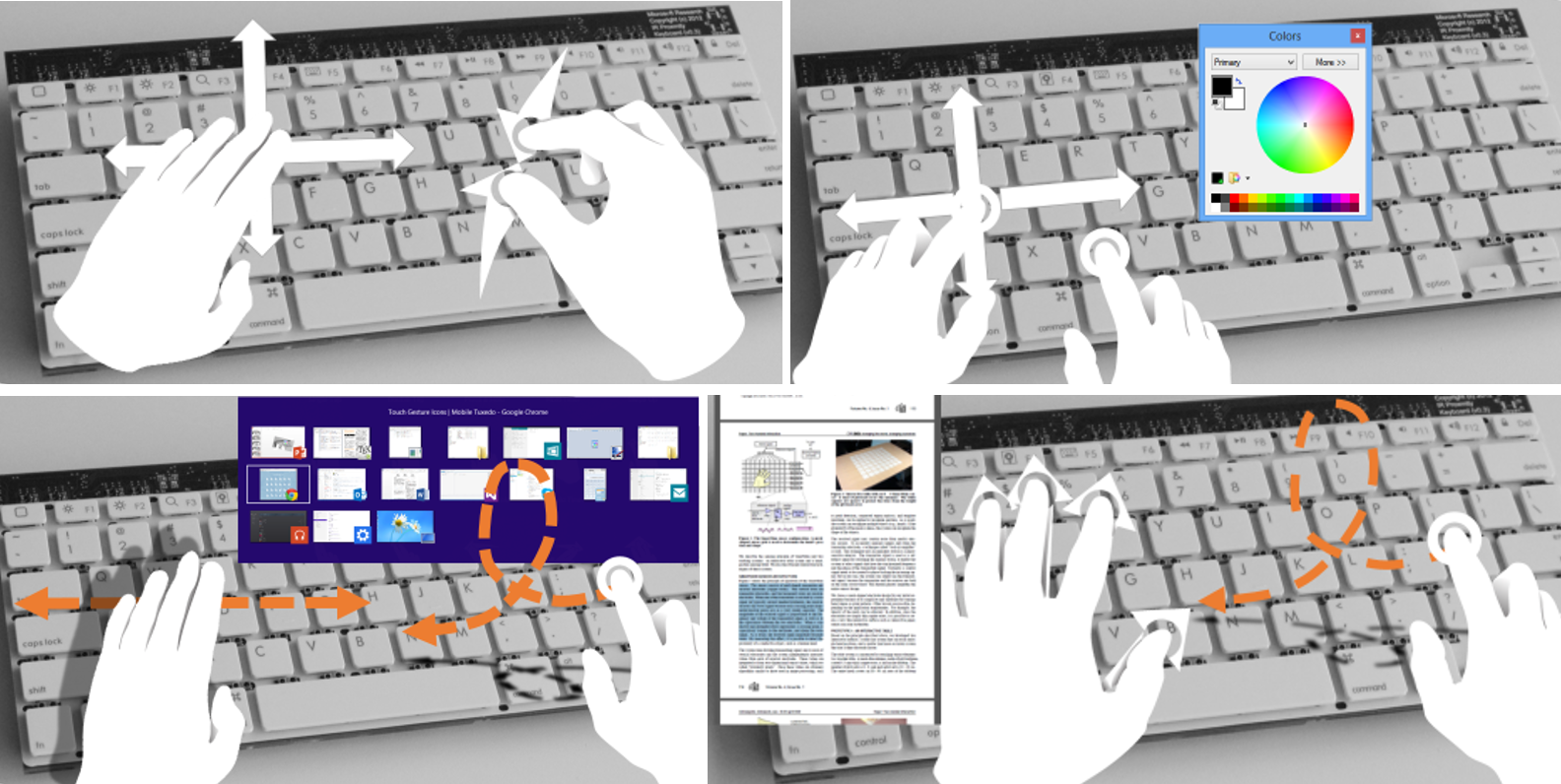

We present a new type of augmented mechanical keyboard, capable of sensing rich and expressive motion gestures performed both on and directly above the device. Our hardware comprises a low-resolution matrix of infrared (IR) proximity sensors interspersed between the keys of a regular mechanical keyboard. This results in coarse but high frame-rate motion data.

We extend a machine learning algorithm, traditionally used for static classification only, to robustly support dynamic, temporal gestures. We propose the use of motion signatures, a technique that utilizes pairs of motion history images and a random-forest-based classifier to robustly recognize a large set of motion gestures on and directly above the keyboard. Our technique achieves a mean per-frame classification accuracy of 75.6% in leave-one-subject-out and 89.9% in half-test/half-training cross-validation.
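To make the idea of a motion signature concrete, the following is a minimal sketch only. The abstract does not say how the motion history images are computed or paired, so this assumes the classic formulation (a cell is set to a maximum value `tau` when motion is detected and otherwise decays by one per frame) and assumes the pair consists of a short and a long decay window. The function names, the `threshold` value, and both `tau` parameters are hypothetical and do not come from the paper.

```python
def update_mhi(mhi, prev_frame, frame, tau, threshold=0.1):
    """One update step of a classic motion history image (assumed formulation).

    A cell whose sensor reading changed by more than `threshold` is set to
    `tau`; every other cell decays by one, bottoming out at zero.
    """
    return [
        [
            tau if abs(cur - prev) > threshold else max(h - 1, 0)
            for h, prev, cur in zip(h_row, prev_row, cur_row)
        ]
        for h_row, prev_row, cur_row in zip(mhi, prev_frame, frame)
    ]


def motion_signature(frames, tau_short=5, tau_long=20, threshold=0.1):
    """Build a flat feature vector from a pair of motion history images.

    `frames` is a sequence of 2D grids (lists of rows) of proximity readings.
    The two decay windows are an illustrative assumption, not the paper's
    stated design.
    """
    rows, cols = len(frames[0]), len(frames[0][0])
    short = [[0] * cols for _ in range(rows)]
    long_ = [[0] * cols for _ in range(rows)]

    # Feed each consecutive pair of frames into both history images.
    for prev, cur in zip(frames, frames[1:]):
        short = update_mhi(short, prev, cur, tau_short, threshold)
        long_ = update_mhi(long_, prev, cur, tau_long, threshold)

    # Normalise each image to [0, 1] and concatenate into one vector.
    flat_short = [h / tau_short for row in short for h in row]
    flat_long = [h / tau_long for row in long_ for h in row]
    return flat_short + flat_long
```

A vector like this, computed per frame, is the kind of input that could be passed to an off-the-shelf random forest implementation (for example scikit-learn's `RandomForestClassifier`) to obtain a per-frame gesture label. Whether the authors' features look like this is not stated in the abstract.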

We detail our hardware and gesture recognition algorithm, provide performance and accuracy numbers, and demonstrate a large set of gestures designed to be performed with our device. We conclude with qualitative feedback from users, a discussion of limitations, and areas for future work.

Accompanying Video

ACM Digital Library

CHI '14 Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 2014. Best Paper Award.