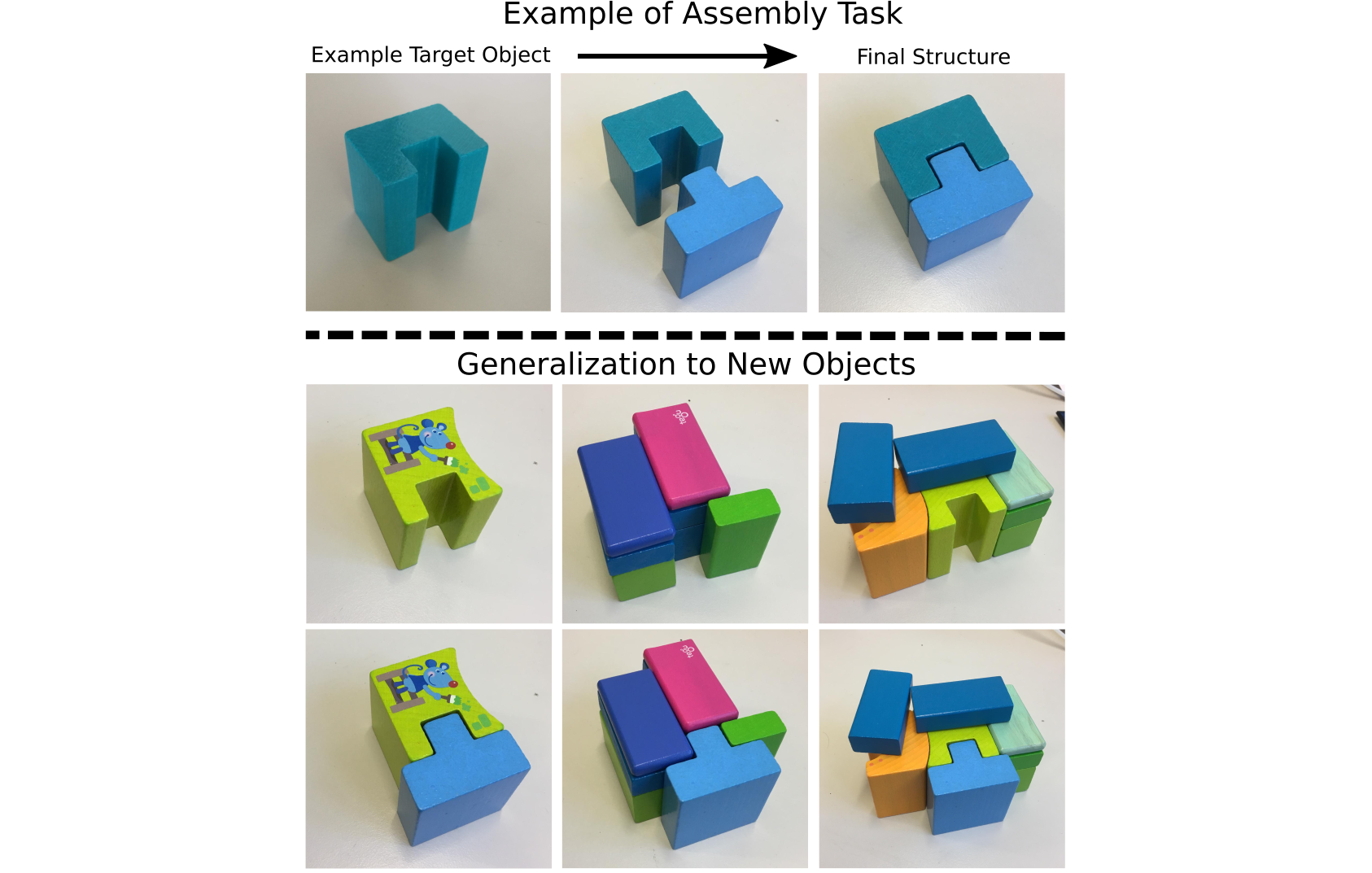

Example of an assembly task. Given a target object, the manipulation block should be placed to obtain a compound structure. Bottom: Given the example on the top, generalization should be possible as shown in the two bottom rows.

Abstract

In this letter we propose a robotic vision task with the goal of enabling robots to execute complex assembly tasks in unstructured environments using a camera as the primary sensing device. We formulate the task as an instance of 6D pose estimation of template geometries, to which manipulation objects should be connected. In contrast to the standard 6D pose estimation task, this requires reasoning about local geometry that is surrounded by arbitrary context, such as a power outlet embedded into a wall. We propose a deep learning based approach to solve this task alongside a novel dataset that will enable future work in this direction and can serve as a benchmark. We experimentally show that state-of-the-art 6D pose estimation methods alone are not sufficient to solve the task but that our training procedure significantly improves the performance of deep learning techniques in this context.

Accompanying Video

Downloads

Dataset

We release the dataset collected for the purposes of this project. For more details on how to use the dataset, please refer to the example code on Github. We are currently working on a solution for hosting the dataset online. At the moment, the dataset is available on request. Please contact the authors for further details.