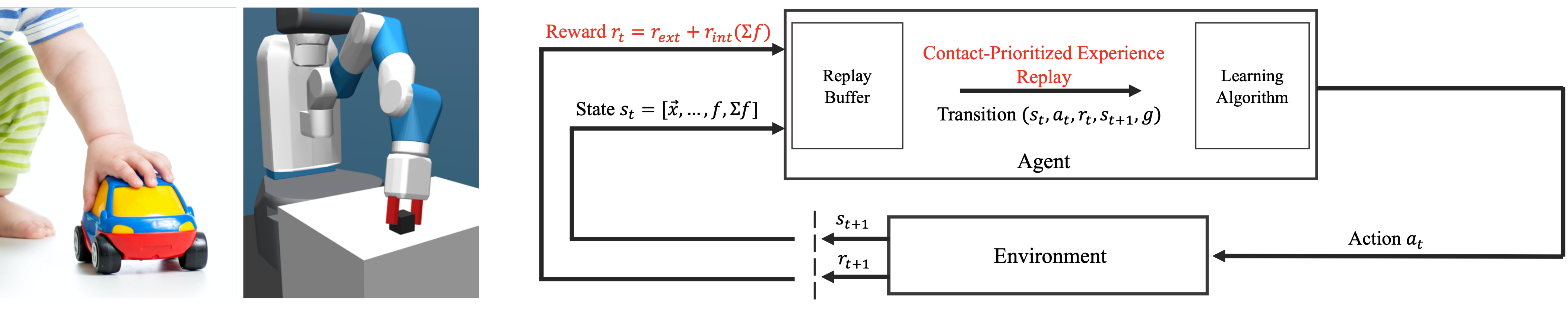

Our work is inspired by the intrinsic motivation of infants, who explore the world through physical interaction. We model tactile sensing with force sensors (red, left image) and use the resulting tactile information to accelerate learning. Specifically, we introduce an intrinsic reward based on tactile signals and a sampling prioritization scheme that together improve the exploration efficiency of deep reinforcement learning for robot manipulation tasks.

Abstract

In this paper, we address the challenge of exploration in deep reinforcement learning for robotic manipulation tasks. In sparse-reward goal settings, an agent receives no positive feedback until it achieves the goal by chance, which becomes practically infeasible for longer control sequences. Inspired by the touch-based exploration observed in children, we formulate an intrinsic reward, based on the sum of forces between a robot's force sensors and the manipulated objects, that encourages physical interaction. Furthermore, we introduce contact-prioritized experience replay, a sampling scheme that prioritizes contact-rich episodes and transitions. We show that our approach accelerates exploration and outperforms state-of-the-art methods on three fundamental robot manipulation benchmarks.
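The two ingredients named in the abstract can be illustrated with a minimal sketch. It is written under our own assumptions and is not the authors' implementation. We assume each force sensor reports a 3-D force vector against the manipulated object. The intrinsic reward is taken as a scaled, optionally clipped sum of the force magnitudes. Replay is prioritized in two stages: episodes are drawn in proportion to their total contact, and transitions within an episode are drawn in proportion to their per-step contact. The function names and the `scale`, `clip`, and `eps` parameters are hypothetical.

```python
import numpy as np


def tactile_intrinsic_reward(sensor_forces, scale=1.0, clip=None):
    """Hypothetical intrinsic reward: scaled sum of contact-force magnitudes.

    sensor_forces: array-like of shape (num_sensors, 3); row i is the force
    vector that robot force sensor i measures against the manipulated object.
    """
    # Magnitude of each sensor's force vector, then the sum over all sensors.
    magnitudes = np.linalg.norm(np.asarray(sensor_forces, dtype=float), axis=-1)
    total = float(magnitudes.sum())
    # Optional cap so very hard contacts do not dominate the task reward.
    if clip is not None:
        total = min(total, clip)
    return scale * total


def contact_prioritized_sample(episode_contacts, batch_size, rng, eps=1e-6):
    """Sample (episode, timestep) pairs, favouring contact-rich data.

    episode_contacts: list of 1-D arrays; entry i holds the per-step
    contact-force totals recorded during episode i (assumed non-negative).
    """
    # Stage 1: episode priority is its total contact; eps keeps
    # contact-free episodes sampleable with a small probability.
    ep_priority = np.array([c.sum() for c in episode_contacts]) + eps
    ep_probs = ep_priority / ep_priority.sum()
    episodes = rng.choice(len(episode_contacts), size=batch_size, p=ep_probs)

    samples = []
    for ep in episodes:
        # Stage 2: within the chosen episode, prefer steps with more contact.
        step_priority = np.asarray(episode_contacts[ep], dtype=float) + eps
        step_probs = step_priority / step_priority.sum()
        t = rng.choice(len(step_priority), p=step_probs)
        samples.append((int(ep), int(t)))
    return samples


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Two sensors touching the object: magnitudes 2 and 5, scaled by 0.1.
    print(tactile_intrinsic_reward([[0.0, 0.0, 2.0], [3.0, 4.0, 0.0]], scale=0.1))
    # Episode 0 has no contact; episode 1 has contact only at step 1.
    contacts = [np.zeros(3), np.array([0.0, 5.0, 0.0])]
    print(contact_prioritized_sample(contacts, batch_size=4, rng=rng))
```

Sampling episodes first and transitions second keeps the cost of drawing a batch proportional to the number of episodes rather than the total number of stored transitions. The small `eps` term prevents contact-free data from being excluded entirely, which would otherwise bias the replay distribution.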