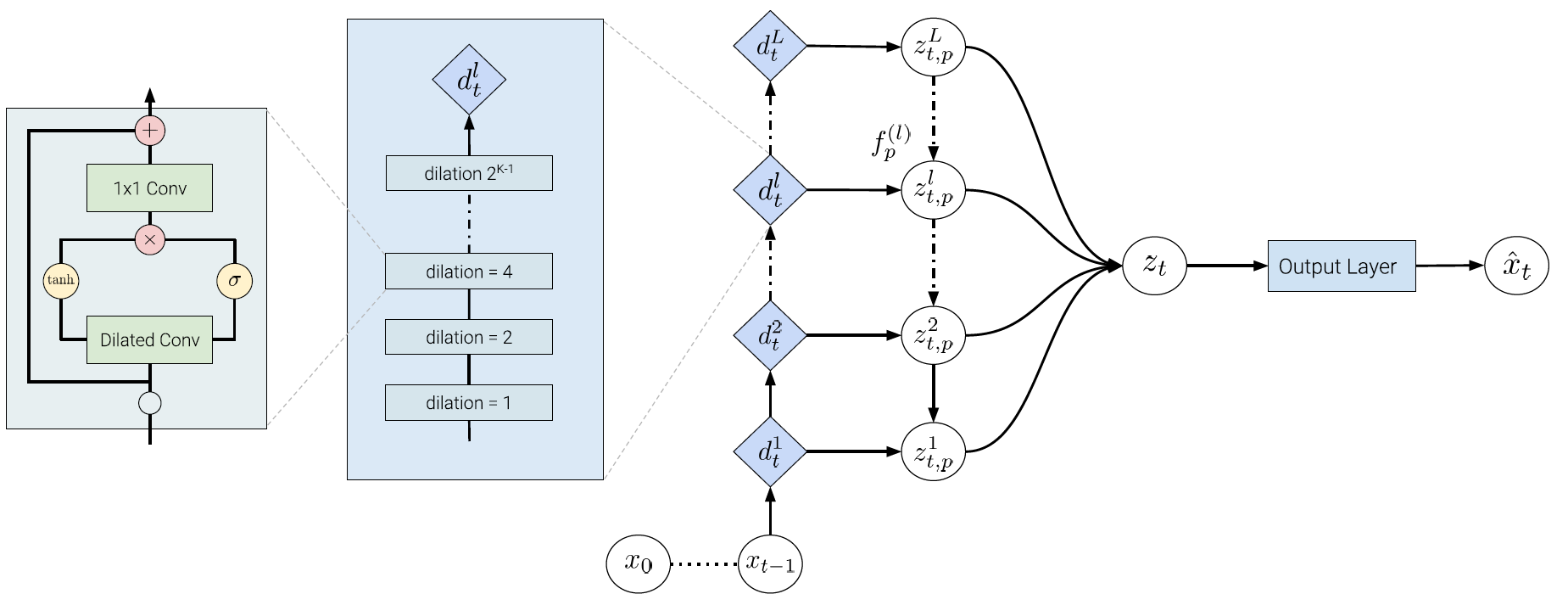

In STCN, the latent random variables are arranged to match the temporal hierarchy of the temporal convolutional network (TCN) blocks, which effectively distributes the random variables over the various timescales. Crucially, our hierarchical latent structure is designed as a modular add-on for any temporal convolutional network architecture. Separating the deterministic and stochastic layers allows us to build STCNs without modifying the base TCN architecture, and hence retains the scalability of TCNs with respect to the receptive field.
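To illustrate how a TCN hierarchy spans several timescales, the following minimal sketch computes the receptive field after each layer of a stack of dilated causal convolutions. The function name `receptive_fields`, the kernel size of 2, and the doubling dilation schedule are illustrative assumptions, not settings stated in the text. Under these assumptions, a latent variable attached to a deeper layer would be conditioned on a longer window of past inputs.

```python
def receptive_fields(num_layers, kernel_size=2, dilation_base=2):
    """Return the receptive field (in time steps) after each layer.

    Assumes a stack of dilated causal convolutions in which layer l
    (0-indexed) uses dilation dilation_base ** l. This schedule is an
    assumption made for illustration.
    """
    fields = []
    field = 1  # a single time step before any convolution is applied
    for layer in range(num_layers):
        dilation = dilation_base ** layer
        # Each layer extends the window by (kernel_size - 1) * dilation steps.
        field += (kernel_size - 1) * dilation
        fields.append(field)
    return fields


if __name__ == "__main__":
    for layer, field in enumerate(receptive_fields(num_layers=5), start=1):
        print(f"layer {layer}: receptive field of {field} time steps")
```

With these hypothetical settings the receptive field doubles at every layer, so stacking more TCN blocks extends the longest timescale exponentially while the lower layers keep covering short-range structure.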

Abstract

Convolutional architectures have recently been shown to be competitive on many sequence modeling tasks when compared to the de facto standard of recurrent neural networks (RNNs), while providing computational and modeling advantages due to inherent parallelism. However, there remains a performance gap relative to more expressive stochastic RNN variants, especially those with several layers of dependent random variables. In this work, we propose stochastic temporal convolutional networks (STCNs), a novel architecture that combines the computational advantages of temporal convolutional networks (TCNs) with the representational power and robustness of stochastic latent spaces. In particular, we propose a hierarchy of stochastic latent variables that captures temporal dependencies at different timescales. The architecture is modular and flexible due to the decoupling of deterministic and stochastic layers. We show that the proposed architecture achieves state-of-the-art log-likelihoods across several tasks. Finally, the model is capable of predicting high-quality synthetic samples over a long-range temporal horizon when modeling handwritten text.