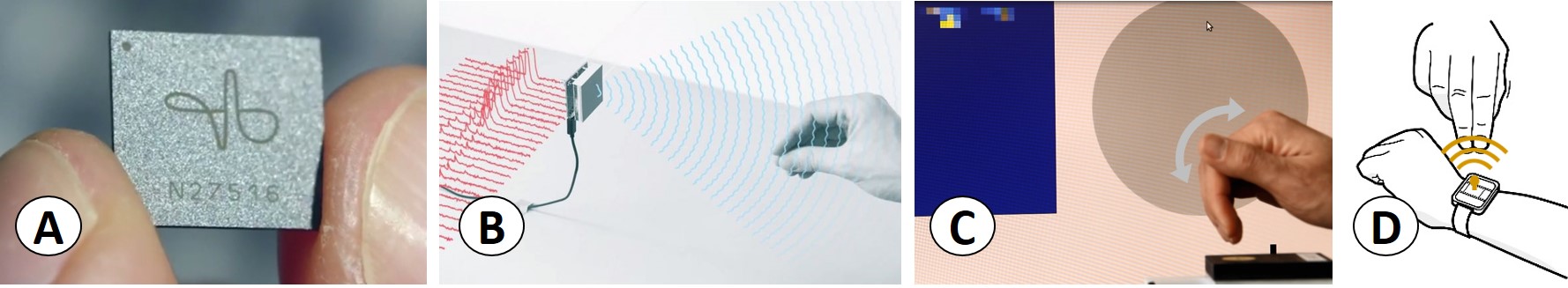

We explore interactive possibilities enabled by Google's Project Soli (A), a solid-state short-range radar that captures energy reflected off hands and other objects (B). The signal is unique in that it resolves motion in the millimeter range but does not directly capture shape (C). We propose a novel gesture recognition algorithm specifically designed to recognize subtle, low-effort gestures based on the Soli signal. Code and dataset for our UIST 2016 publication are available on GitHub.


Abstract

This paper proposes a novel machine learning architecture, specifically designed for radio-frequency based gesture recognition. We focus on high-frequency (60 GHz), short-range radar based sensing, in particular Google’s Soli sensor. The signal has unique properties such as resolving motion at a very fine level and allowing for segmentation in range and velocity spaces rather than image space. This enables recognition of new types of inputs but poses significant difficulties for the design of input recognition algorithms. The proposed algorithm is capable of detecting a rich set of dynamic gestures and can resolve small motions of fingers in fine detail. Our technique is based on an end-to-end trained combination of deep convolutional and recurrent neural networks. The algorithm achieves high recognition rates (avg. 87%) on a challenging set of 11 dynamic gestures and generalizes well across 10 users. The proposed model runs on commodity hardware at 140 Hz (CPU only).
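
As a rough illustration of the data flow named in the abstract (per-frame convolutional features feeding a recurrent state that emits a gesture prediction at every frame), here is a minimal, untrained sketch in plain Python. Apart from the count of 11 gesture classes, everything in it is an assumption made for illustration: the frame length, kernel size, hidden size, the single 1-D convolution, the plain tanh recurrence, and the class name `ToyConvRecurrentClassifier` are hypothetical stand-ins, not the architecture from the paper.

```python
import math
import random

NUM_GESTURES = 11   # from the abstract
FRAME_SIZE = 8      # hypothetical: length of one flattened radar frame
KERNEL_SIZE = 3     # hypothetical
HIDDEN_SIZE = 6     # hypothetical


def random_matrix(rows, cols, rng):
    """Random weights; a real model would learn these end to end."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def conv1d_relu(frame, kernel):
    """Valid 1-D convolution (cross-correlation) followed by ReLU."""
    k = len(kernel)
    return [
        max(0.0, sum(kernel[j] * frame[i + j] for j in range(k)))
        for i in range(len(frame) - k + 1)
    ]


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class ToyConvRecurrentClassifier:
    """Convolutional feature extractor followed by a simple recurrent layer."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        feature_size = FRAME_SIZE - KERNEL_SIZE + 1
        self.kernel = [rng.uniform(-0.5, 0.5) for _ in range(KERNEL_SIZE)]
        self.w_in = random_matrix(HIDDEN_SIZE, feature_size, rng)
        self.w_rec = random_matrix(HIDDEN_SIZE, HIDDEN_SIZE, rng)
        self.w_out = random_matrix(NUM_GESTURES, HIDDEN_SIZE, rng)

    def classify_sequence(self, frames):
        """Return one probability vector over gestures per input frame."""
        hidden = [0.0] * HIDDEN_SIZE
        outputs = []
        for frame in frames:
            features = conv1d_relu(frame, self.kernel)
            # The recurrent state mixes the current features with the past.
            pre = [
                a + b
                for a, b in zip(
                    matvec(self.w_in, features), matvec(self.w_rec, hidden)
                )
            ]
            hidden = [math.tanh(p) for p in pre]
            outputs.append(softmax(matvec(self.w_out, hidden)))
        return outputs
```

Calling `ToyConvRecurrentClassifier().classify_sequence(frames)` on a list of frames returns one 11-way probability vector per frame. Because the weights are random, the predictions carry no meaning; the sketch only shows how a per-frame encoder and a recurrent state fit together.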

Video