Abstract

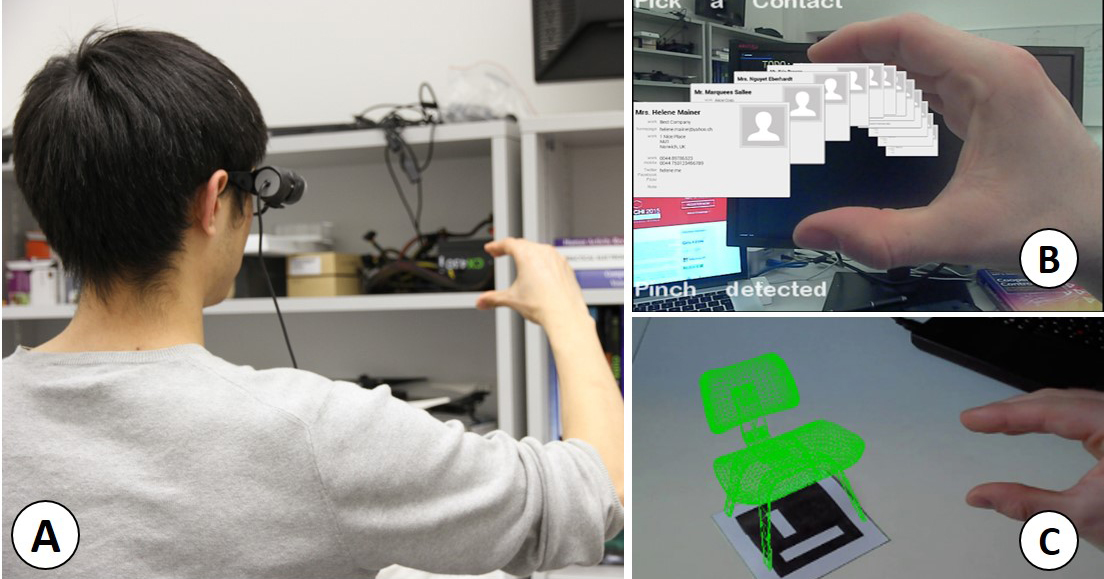

We present a machine learning technique that recognizes hand gestures and estimates the metric depth of hands for 3D interaction, relying only on monocular RGB video input. We aim to enable spatial interaction with small, body-worn devices, where rich 3D input is desired but conventional depth sensors are impractical because of their power consumption and size. We propose a hybrid classification-regression approach that learns and predicts a mapping from RGB colors to absolute metric depth in real time. We also classify distinct hand gestures, allowing for a variety of 3D interactions. We demonstrate our technique in three mobile interaction scenarios and evaluate the method quantitatively and qualitatively.