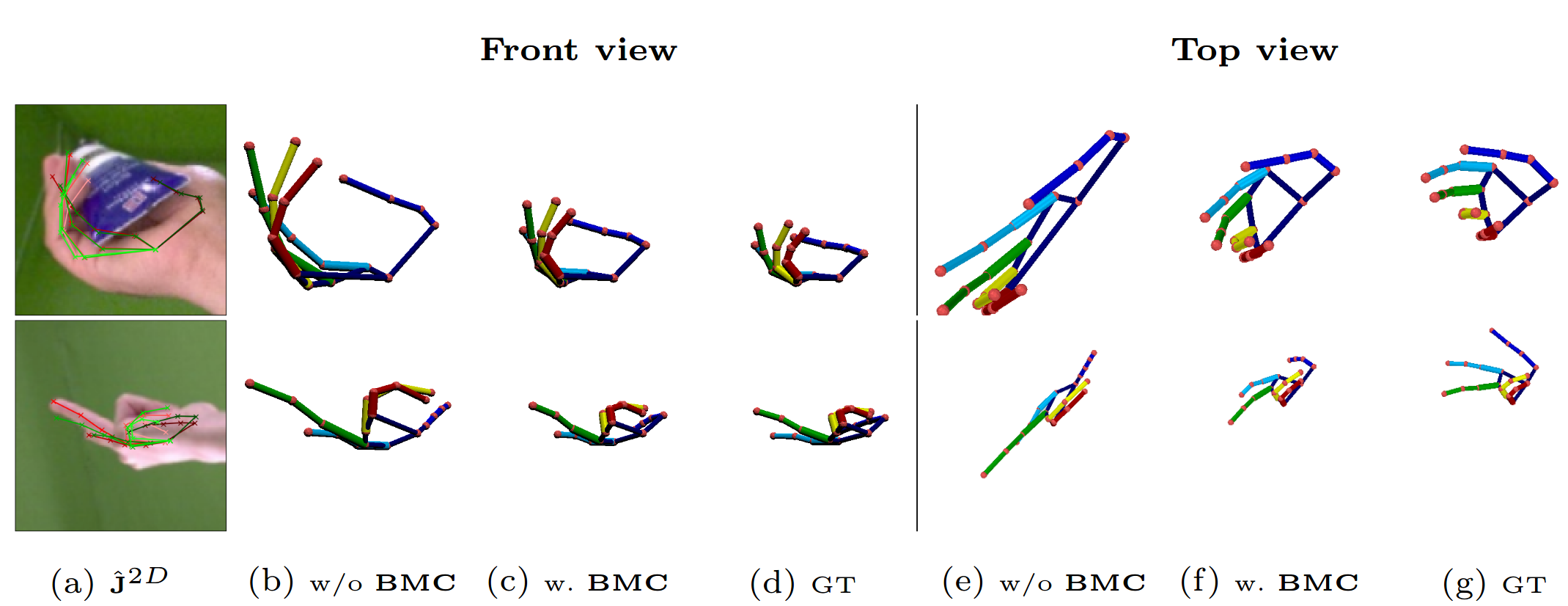

Training on additional weakly supervised data has the potential to alleviate 3D data scarcity and improve generalization. (b,e) However, we find that doing so yields 3D poses whose 2D projections are correct but whose 3D configurations are anatomically implausible. (c,f) Adding our biomechanical constraints (BMC) significantly improves the pose predictions, both quantitatively and qualitatively. The resulting 3D poses are anatomically valid, display more accurate depth and scale even under severe self- and object occlusions, and are thus closer to the ground truth (d,g). To constrain the prediction, we propose a novel method for decomposing a 3D hand pose into its biomechanical constituents without the need for inverse kinematics. This representation allows us to constrain the hand in a differentiable manner.
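As a rough illustration of reading biomechanical quantities directly off predicted 3D keypoints, with no inverse-kinematics fitting, the sketch below computes bone vectors, bone lengths and the angle between consecutive bones of each finger. It assumes a hypothetical 21-keypoint ordering, and `interval_penalty` is an assumed hinge-style range loss; neither is the authors' exact formulation, and the unsigned bone angle is a simplification rather than the decomposition proposed here.

```python
import numpy as np

# ASSUMPTION: a hypothetical 21-keypoint layout, not taken from the source.
# Index 0 is the wrist; each finger then has 4 joints ordered from the base
# towards the tip: thumb 1-4, index 5-8, middle 9-12, ring 13-16, little 17-20.
PARENTS = np.array([-1, 0, 1, 2, 3, 0, 5, 6, 7, 0, 9, 10, 11,
                    0, 13, 14, 15, 0, 17, 18, 19])


def bone_vectors(joints):
    """Return the 20 bone vectors (child minus parent) of a (21, 3) pose."""
    children = np.arange(1, 21)
    return joints[children] - joints[PARENTS[children]]


def bone_lengths(joints):
    """Euclidean length of every bone, shape (20,)."""
    return np.linalg.norm(bone_vectors(joints), axis=-1)


def consecutive_bone_angles(joints, eps=1e-8):
    """Unsigned angle (radians) between consecutive bones of each finger.

    Bone k ends at joint k + 1, so finger f owns bones 4f .. 4f + 3, the first
    of which connects the wrist to the finger base. Returns shape (5, 3).
    """
    bones = bone_vectors(joints)
    norms = np.linalg.norm(bones, axis=-1, keepdims=True)
    unit = bones / np.maximum(norms, eps)  # guard against zero-length bones
    per_finger = unit.reshape(5, 4, 3)     # (finger, bone, xyz)
    cos = np.sum(per_finger[:, :-1] * per_finger[:, 1:], axis=-1)
    return np.arccos(np.clip(cos, -1.0, 1.0))


def interval_penalty(x, lo, hi):
    """Hinge-style range loss: zero inside [lo, hi], linear outside."""
    return np.maximum(lo - x, 0.0) + np.maximum(x - hi, 0.0)
```

A bone-length term could then be written as `interval_penalty(bone_lengths(pose), lo, hi).sum()`, where `lo` and `hi` are hypothetical per-bone limits. NumPy is used here only for readability: the computation consists of differences, norms, `arccos` and `max`, so it can be expressed in an autodiff framework as well, although the gradient of `arccos` is unbounded at ±1 and a practical version would need extra care for perfectly straight fingers.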

Abstract

Estimating 3D hand pose from 2D images is a difficult inverse problem due to the inherent scale and depth ambiguities. Current state-of-the-art methods train fully supervised deep neural networks with 3D ground-truth data. However, acquiring 3D annotations is expensive, typically requiring calibrated multi-view setups or labour-intensive manual annotation. While annotations of 2D keypoints are much easier to obtain, how to efficiently leverage such weakly supervised data to improve 3D hand pose prediction remains an important open question. The key difficulty is that directly adding 2D supervision mostly benefits the 2D proxy objective but does little to alleviate the depth and scale ambiguities. Embracing this challenge, we propose a set of novel losses that constrain the prediction of a neural network to lie within the range of biomechanically feasible 3D hand configurations. We show through extensive experiments that the proposed constraints significantly reduce the depth ambiguity and allow the network to leverage additional 2D-annotated images more effectively. For example, on the challenging FreiHAND dataset, using additional 2D annotations without our biomechanical constraints reduces the depth error by only 15%, whereas with the constraints the error is reduced significantly, by 50%.
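The scale and depth ambiguity can be checked numerically with an ideal pinhole camera. In this sketch the focal length, principal point and keypoint coordinates are made-up values chosen for illustration. Scaling the whole pose about the camera center, or sliding a single joint along its viewing ray, leaves every 2D projection unchanged, so a loss on 2D keypoints alone cannot distinguish these poses even though their depth, scale and bone lengths differ.

```python
import numpy as np


def project(points, focal=500.0, center=(320.0, 240.0)):
    """Ideal pinhole projection of (N, 3) camera-space points to (N, 2) pixels."""
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    return np.stack([focal * x / z + center[0], focal * y / z + center[1]], axis=-1)


# Three hypothetical keypoints roughly 0.5 m in front of the camera (metres).
pose = np.array([[0.00, 0.00, 0.50],
                 [0.03, -0.02, 0.52],
                 [0.05, -0.06, 0.49]])

# 1) Global scale/depth ambiguity: a larger hand farther away looks identical.
s = 1.8
print(np.allclose(project(pose), project(s * pose)))  # True

# 2) Per-joint depth ambiguity: slide joint 2 along its viewing ray.
moved = pose.copy()
moved[2] *= 1.3
print(np.allclose(project(pose), project(moved)))  # True

# ...yet the bone from joint 1 to joint 2 now has a different length.
print(np.linalg.norm(pose[2] - pose[1]), np.linalg.norm(moved[2] - moved[1]))
```

Constraints on 3D quantities such as bone length can separate poses that 2D supervision alone treats as equivalent.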

Acknowledgments

Adrian Spurr carried out this work during his internship at NVIDIA.